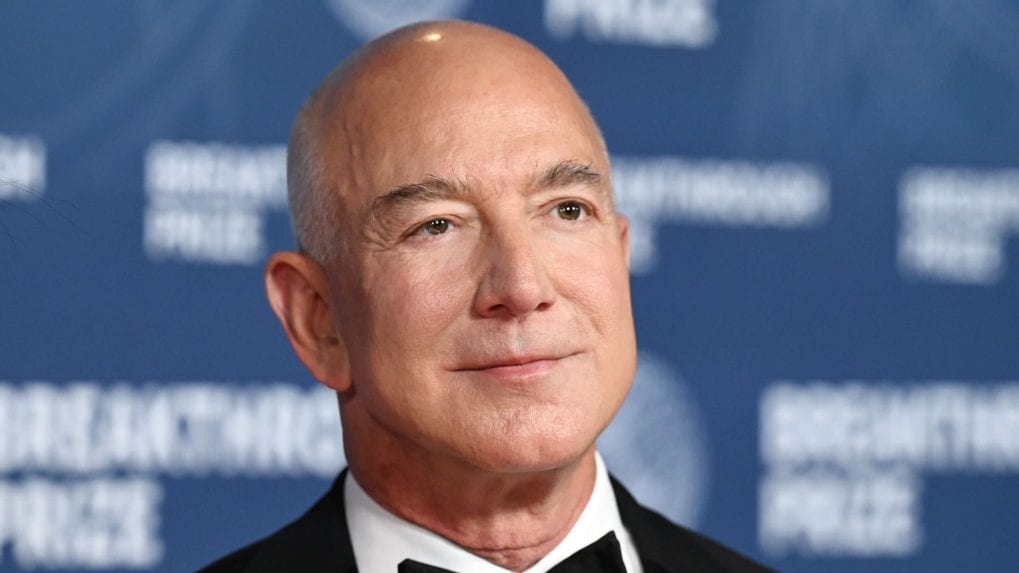

Jeff Bezos says Big Tech’s AI data centre rush is repeating a century-old mistake

At the DealBook Summit, the Amazon founder likened today’s AI infrastructure boom to factories generating their own electricity before public grids emerged.

Jeff Bezos has cautioned that the technology industry's aggressive push to build company-owned data centres for artificial intelligence is unsustainable, warning that the strategy repeats a historical miscalculation made during the early days of electrification.

Speaking at the 2026 New York Times DealBook Summit, the Amazon founder said today's AI infrastructure race resembles the way factories in the early 20th century generated their own electricity because centralised power grids did not yet exist. Once shared grids became widespread, those private power plants quickly became obsolete.

“Right now, everybody is building their own data centre, their own generators essentially. And that’s not going to last,” Bezos said.

He illustrated the point by recalling a visit to a 300-year-old brewery in Luxembourg that once ran its own power station, an arrangement that became inefficient once public electricity networks emerged.

Bezos argued that AI computing will follow a similar trajectory, shifting away from fragmented, company-specific facilities towards large-scale, centralised cloud platforms. Services such as Amazon Web Services, he said, are better positioned than firms operating isolated infrastructure to deliver higher efficiency, better utilisation and lower overall costs.
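One common explanation for the kind of utilisation gain Bezos describes is capacity pooling: when customers' busy periods fall at different times, a shared facility can be sized for the peak of their combined demand rather than the sum of each customer's individual peak. The Python sketch below illustrates that effect under stated assumptions only. The three firms, their hourly demand figures and the `compare_provisioning` helper are hypothetical and invented for this example, and the model ignores real-world factors such as latency, data-residency rules and specialised hardware.

```python
# Hypothetical illustration of capacity pooling. All demand figures are
# invented for this sketch and are not drawn from Amazon or any real firm.

# Hourly compute demand (arbitrary units) for three hypothetical firms
# whose busy hours fall at different times of day.
DEMANDS: dict[str, list[int]] = {
    "firm_a": [2, 2, 3, 9, 9, 3, 2, 2],
    "firm_b": [9, 8, 3, 2, 2, 3, 4, 8],
    "firm_c": [3, 3, 9, 4, 3, 9, 3, 3],
}


def compare_provisioning(demands: dict[str, list[int]]) -> dict[str, float]:
    """Compare capacity and average utilisation for dedicated vs pooled capacity."""
    hours = len(next(iter(demands.values())))
    total_work = sum(sum(series) for series in demands.values())

    # Dedicated: every firm builds enough capacity for its own peak hour.
    dedicated_capacity = sum(max(series) for series in demands.values())

    # Pooled: one shared facility sized for the peak of combined demand.
    combined = [sum(hour) for hour in zip(*demands.values())]
    pooled_capacity = max(combined)

    return {
        "dedicated_capacity": dedicated_capacity,
        "pooled_capacity": pooled_capacity,
        # Average utilisation = work actually done / capacity-hours provisioned.
        "dedicated_utilisation": total_work / (dedicated_capacity * hours),
        "pooled_utilisation": total_work / (pooled_capacity * hours),
    }


if __name__ == "__main__":
    for name, value in compare_provisioning(DEMANDS).items():
        print(f"{name}: {value:g}")
```

With these made-up numbers, pooling cuts provisioned capacity from 27 to 15 units and lifts average utilisation from 50 percent to 90 percent. Pooled capacity can never exceed the dedicated total, because the peak of a sum is at most the sum of the peaks; how large the real-world gain is depends on how much customers' peaks actually overlap.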

His comments come as the global energy footprint of AI continues to grow rapidly. Data centres consumed about 415 terawatt-hours of electricity worldwide in 2024, accounting for roughly 1.5 percent of global demand. By 2030, that figure is projected to rise to around 945 terawatt-hours, nearly equivalent to Japan’s total electricity consumption. In the US, data centres could represent as much as 12 percent of national power demand, up from about 4 percent today.
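The figures above also imply a few back-of-envelope numbers that the article does not state directly. The sketch below derives them using only the quoted values; the helper names are hypothetical, the 1.5 percent share is itself rounded, and the growth-rate calculation assumes smooth compounding between 2024 and 2030, which the projection does not necessarily claim.

```python
# Back-of-envelope arithmetic using only the figures quoted in the article.
DC_TWH_2024 = 415.0    # data-centre electricity use in 2024 (TWh)
DC_SHARE_2024 = 0.015  # approximate share of global electricity demand in 2024
DC_TWH_2030 = 945.0    # projected data-centre electricity use in 2030 (TWh)
YEARS = 2030 - 2024


def implied_global_demand(twh: float, share: float) -> float:
    """Total demand implied by a sector's consumption and its share of the total."""
    return twh / share


def implied_cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1


if __name__ == "__main__":
    global_twh = implied_global_demand(DC_TWH_2024, DC_SHARE_2024)
    print(f"Implied global demand, 2024: {global_twh:,.0f} TWh")
    print(f"Growth multiple, 2024-2030: {DC_TWH_2030 / DC_TWH_2024:.2f}x")
    print(f"Implied annual growth: {implied_cagr(DC_TWH_2024, DC_TWH_2030, YEARS):.1%}")
```

On these inputs, global demand works out to roughly 27,700 TWh, and the projection amounts to data-centre consumption growing about 2.3-fold, or a little under 15 percent a year.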

AI workloads are significantly more energy-intensive than conventional computing. A single query to a large language model can require nearly ten times the energy of a standard web search, while training a single large AI model can consume as much electricity as roughly 200 average US households use in a year.

These rising demands have triggered an international scramble for power, with technology companies pursuing nuclear energy agreements, renewable projects and even experimental concepts such as space-based data centres to secure future supply.

Bezos is not alone in identifying energy as the central constraint on AI growth. Microsoft CEO Satya Nadella has said power shortages are leaving advanced chips underutilised, while OpenAI CEO Sam Altman has argued that breakthroughs in fusion or ultra-cheap solar are needed to sustain progress. Google CEO Sundar Pichai has similarly described electricity availability as a long-term bottleneck.

Despite these warnings, major firms continue to invest heavily in private infrastructure. Meta has locked in nuclear power capacity for its Prometheus AI supercluster in Ohio, while Alphabet recently acquired Intersect Power to develop integrated energy-and-computing projects.

Bezos framed AI as a horizontal technology, comparable to electricity itself. Just as industry ultimately standardised around shared power grids, he believes AI computing will consolidate around large cloud providers rather than remain fragmented across individual corporate data centres.