A growing bond at what cost? How AI chatbots are mining emotions on social media

Tech giants are turning AI chatbots into emotional companions and data goldmines, blurring the lines between innovation, manipulation, and mental health risk, especially for the young and vulnerable.

Social media bots were once dismissed as spammy intrusions. They are now emerging as pivotal tools in the AI strategies of some of the world’s largest technology companies. Meta, Alphabet, Microsoft and Snap are increasingly embedding AI chatbot features into their flagship platforms, including Meta’s Instagram and WhatsApp, and reframing these bots as personal assistants and digital companions. This strategic pivot is not just about convenience. It's about data and engagement.

In a recent podcast, Meta’s Chief Executive Mark Zuckerberg described AI chatbots not as cold computational agents, but as emotionally responsive companions, even likening them to therapists. People are already tapping into AI to prepare for difficult conversations in their lives, he said.

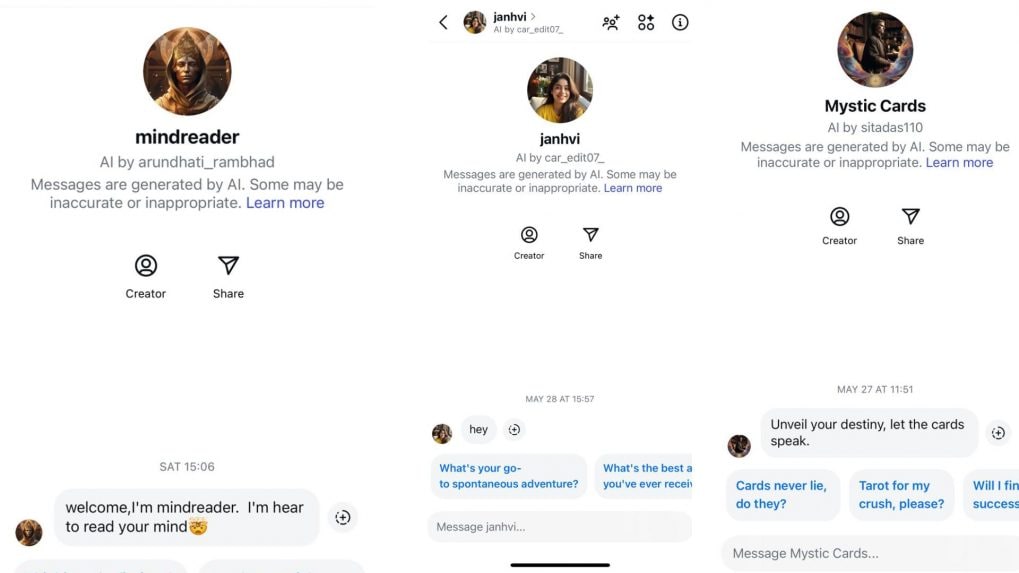

Zuckerberg's company has taken a notable step beyond automation: giving users the power to build their own chatbots, each with unique personalities and functions.

These personalities are proving popular, especially among young users. A dozen teenagers aged 14 to 18 told Storyboard18 that they often chat with bots “for fun” on Instagram. One 14-year-old described experimenting with chatbots to get predictions about her future, career and even romantic prospects.

Such engagement isn’t incidental. According to industry experts, Instagram, Meta’s photo-sharing app with the second-largest user base in its portfolio, has become a laboratory for testing new AI capabilities. Unlike passive data collection through scrolling and likes, AI conversations provide a rich stream of explicit user inputs that can sharpen large language models (LLMs) and enhance personalization tools.

And with that personalization comes controversy.

A cursory look by Storyboard18 found a proliferation of AI accounts on Instagram with suspicious characteristics. Popular chatbots sport names like “The Perfect Astrologer,” “Hot English Teacher,” and “Madhuri Aunty,” many of them linked to accounts with inflated follower counts and bot-heavy interactions. Despite disclaimers warning users that messages are “generated by AI” and may be “inaccurate or inappropriate,” the platform frequently prompts users to engage with them, especially bots branded as therapists, doctors or spiritual advisors.

“Social media giants are not offering entertainment, they are building data empires at the expense of human emotions,” said Ritesh Bhatia, a cybersecurity consultant. Comparing user data to uranium, Bhatia warned of the explosive consequences of unregulated harvesting.

Some researchers worry that the rise of chatbots coincides with deepening mental and emotional vulnerabilities among users, particularly teenagers.

“Bots are always available. They don’t judge. They mirror your tone and offer validation,” said Dr. Ranjana Kumari, Director at the Centre for Social Research. “When real relationships feel scarce or draining, these AI tools start to fill the void.”

Yet others caution that this emotional bond can easily be manipulated. “It’s a double-edged sword,” said cyberpsychologist Nirali Bhatia. “On one hand, they offer a safe space. On the other, they can reciprocate any conversation, no matter how harmful.”

In some cases, that includes lewd exchanges or questionable advice. According to data from Statista, nearly 30 percent of global Instagram users are between the ages of 18 and 24, a demographic particularly vulnerable to influence and, in many cases, manipulation.

India’s regulatory framework is racing to catch up. While the government has issued advisories on deepfakes and is finalizing the rules under the Digital Personal Data Protection (DPDP) Act, 2023, experts argue that the systems in place lack the capacity for real enforcement.

“AI models update weekly, but legal frameworks move at a glacial pace,” said Ritesh Bhatia. “Only 1 percent of cyber fraud cases are resolved. The law isn’t ready.”

Technical audits remain thin on the ground. Legal guidance is fragmented across the IT Act, consumer protections, and sector-specific directives. And despite Meta’s claims of safety features, police and investigators often receive little cooperation from platforms when probing fake or malicious accounts.

The psychological impact may be even more profound. Constant validation from AI, experts say, could weaken young people’s ability to deal with rejection or form nuanced interpersonal connections.

“There’s a cognitive cost,” said Nirali Bhatia. “The more you engage with one-dimensional sources of information, the more you stop experimenting or thinking critically. That affects brain development.”

Governments worldwide now face a difficult balancing act: enabling innovation while safeguarding mental health, privacy and public safety.

“There needs to be a collective response: researchers, policymakers, tech companies and civil society must work together,” said Kumari. “Otherwise, we risk raising a generation that turns to machines not just for answers, but for affirmation.”