# Social Media, Responsibly: When online fame turns risky, platforms face growing scrutiny



Note to readers:

In an always-on world, the way we use social media shapes not just our lives but the safety and wellbeing of those around us. 'Social Media, Responsibly' is our commitment to raising awareness about the risks of online recklessness, from dangerous viral trends to the unseen mental and behavioural impacts on young people. Through stories, conversations and expert insights, we aim to empower individuals to think before they post, to pause before they share, and to remember that no moment of online validation is worth risking safety. But the responsibility does not lie with individuals alone. Social media platforms must also be accountable for the environments they create, ensuring their tools and algorithms prioritise user safety over virality and profit. It’s time to build a culture where being social means being responsible, together.

Dangerous online stunts and risky challenges are fast becoming a troubling trend across social media platforms, raising urgent questions about safety, responsibility, and accountability. While companies highlight their use of AI moderation and strict community guidelines, experts warn that impressionable users continue to risk their lives for fleeting online recognition. The debate now sits at the intersection of technology, culture, and personal choice: who should bear the greater responsibility for keeping the internet safe?

For many first-time internet users in India, platforms like ShareChat and Moj serve as gateways to digital life. “At ShareChat and Moj, we are working on a unique mission of developing platforms in Indic languages for users across cultural and financial classes in India. For a lot of our users, internet is a relatively new world that they are exploring and learning about. Therefore, the responsibility falls on us to take extra measures to make it a safe place for our users,” a ShareChat spokesperson said.

The company says its mission centres on creating systems that actively discourage unsafe content. It has introduced detailed community guidelines aligned with Indian law, prohibiting content that promotes dangerous acts. To enforce this, ShareChat has deployed a “world class AI moderation framework” designed to detect and block graphic or harmful material, while also employing a team of seasoned moderators to review content and act on user reports.

Still, experts point out that regulation and safety measures must go hand in hand with social awareness. Gayatri Sapru, an anthropologist, believes the challenge lies in how individuals perceive risk. “I think it’s sad, but I also think we need to be sensitive and understand that they don’t think they are endangering themselves; they expect it to go well and minimise the risk in their minds, which is why they are taking it on. I think it’s always going to be on the individual here,” she said.

Sapru argues that platforms must act proactively, not just reactively. “What can be done to deter this is that platforms should not allow clips of dangerous feats to be featured at all — not only when they go wrong, but at all,” she noted, adding that removing such content from circulation would prevent it from inspiring copycat acts. She also stressed the importance of schools and parents educating minors about the long-term consequences of impulsive decisions, saying that “just saying ‘don’t do it’ is hardly a deterrent.”

Others, like Mohit Hira, co-founder of Myraid Communications, underline the deeper cultural pull of social validation. “This is tragic but seems to be a trend that isn’t slowing down. Unfortunately, there are plenty of impressionable people out there who crave recognition and want a few seconds of fame. Their measure of success is virtual likes even if it comes at the cost of their lives,” he said.

Hira draws a sharp parallel with other areas of public safety. “Consider another parallel: a carmaker cannot be held responsible for a driver who decides to drink and mow down pedestrians. Nor can local police or civic authorities do much by themselves unless there are preventive measures in place proactively to stop drunken people from driving. The responsibility in this case lies with everyone involved but primarily with the person who believes she/he can get away with anything on social media merely to impress others.”

At the same time, he insists that platforms cannot escape accountability. With today’s technology, he said, unsafe content should be identified and taken down within minutes. “In the absence of any punitive action, people get away and become emboldened,” Hira warned. “Honestly, I don’t know what they’re (platforms) doing. But they do need to step up and take their heads out of the sand: a social media platform cannot be blasé about this for much longer.”

What emerges clearly is that the issue cannot be pinned on one actor alone. The platform and the experts quoted here each emphasise a different part of the problem, from how individuals perceive risk to how quickly unsafe content is taken down, and the debate remains unsettled. As risky trends continue to surface and gain traction, the tension between personal choice, cultural validation, and platform accountability will only deepen.

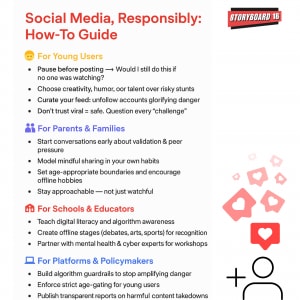

## What You Can Do: Practical Steps for Safer Social Media

### For Young Users

Pause before posting: Ask yourself whether you would still do this if nobody were watching.

Choose safer ways to stand out: Showcase creativity, humour, or talent instead of risky stunts.

Curate your feed: Follow accounts that inspire, educate, or entertain positively. Unfollow or mute pages that glorify dangerous trends.

Check the source: If a challenge looks risky, it probably is. Don’t trust trends just because they’re viral.

### For Parents and Families

Open conversations early: Talk about self-worth, validation, and peer pressure before children start using social media.

Model digital responsibility: Kids copy what they see, so show them mindful sharing through your own habits.

Set healthy boundaries: Delay access to platforms where possible, and encourage offline hobbies that build confidence.

Be approachable, not just watchful: Teens are more likely to share concerns if they don’t fear punishment.

### For Schools and Educators

Integrate digital literacy: Teach students how algorithms work and how online behaviour shapes mental health.

Create alternative stages: Give students offline platforms—debates, art exhibitions, performances—where they can shine.

Collaborate with experts: Invite mental health professionals and cyber psychologists for awareness workshops.

### For Platforms and Policymakers

Algorithmic guardrails: Stop amplifying content that glorifies danger.

Age-gating enforcement: Ensure stronger checks on underage users.

Transparent reporting: Regularly disclose how much harmful content is removed and how algorithms are being adjusted.

Support cultural shifts: Highlight and reward safe, creative challenges to reset what “going viral” looks like.

Social media doesn’t have to be a stage for reckless validation. By combining personal responsibility, family guidance, institutional education and platform accountability, we can build a culture where being seen online doesn’t come at the cost of being safe offline.
